What You Should Know:

– John Snow Labs, the AI for healthcare company, today announced the release of Automated Responsible AI Testing Capabilities in the Generative AI Lab.

– The company describes the release as a first-of-its-kind no-code tool for testing and evaluating the safety and efficacy of custom language models. It lets non-technical domain experts define, run, and share test suites that check AI models for bias, fairness, robustness, and accuracy.

Comprehensive Testing Solutions for AI in Healthcare and Life Sciences

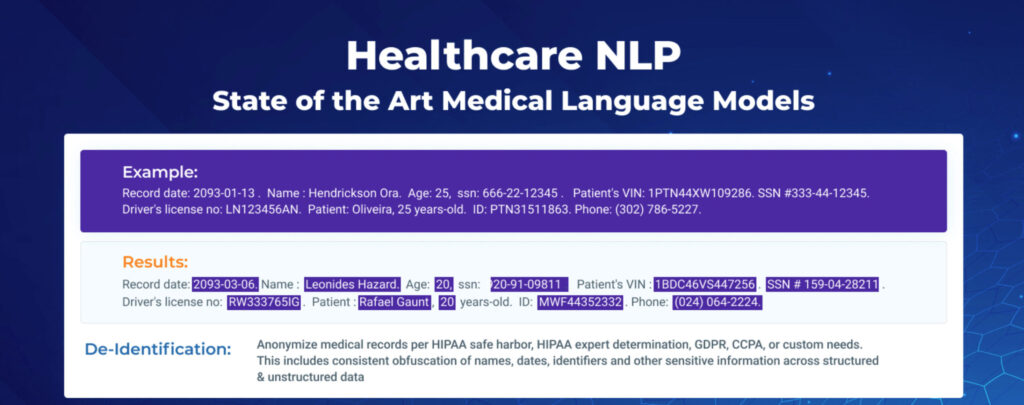

John Snow Labs, a leading AI company for healthcare, provides software, models, and data that help healthcare and life science organizations apply AI. The company is known for developing Spark NLP, Healthcare NLP, the Healthcare GPT LLM, the Generative AI Lab No-Code Platform, and the Medical Chatbot. Its award-winning medical AI software is used by leading pharmaceutical companies, academic medical centers, and health technology companies worldwide.

The new testing capabilities are built on John Snow Labs’ open-source LangTest library, which includes more than 100 test types covering aspects of Responsible AI such as bias, security, toxicity, and political leaning. LangTest uses generative AI to create test cases automatically, so a comprehensive test suite can be produced in minutes rather than weeks. Because it is designed specifically for testing custom AI models, LangTest covers scenarios that general-purpose benchmarks and leaderboards do not.

Recent US regulations and professional guidelines have made comprehensive testing essential for companies that want to release new AI-based products and services:

– The Affordable Care Act (ACA) Section 1557 Final Rule, effective June 2024, prohibits discrimination in medical AI algorithms on the basis of race, color, national origin, sex, age, or disability.

– The HTI-1 Final Rule, which sets transparency requirements for medical decision support systems, requires companies to disclose how their models are trained and tested.

– The American Bar Association guidelines, which respond to lawsuits against companies whose models match job descriptions to candidates’ resumes, require extensive internal and third-party audits before AI is deployed.

There is an urgent need for comprehensive testing solutions for Large Language Models (LLMs). Many domain experts lack the technical skills to run such tests, and many data scientists lack the domain knowledge to design comprehensive, industry-specific ones. The Generative AI Lab closes this gap by letting domain experts create, edit, and interpret model tests without relying on a data scientist. The tool also builds in best practices such as test versioning, sharing, and automatic test execution for every new model.