BOSTON — On a Friday morning in July, an internal medicine resident at Beth Israel Deaconess Medical Center stood up in front of a crowded room of fellow trainees and laid out a case for them to solve. A 39-year-old woman who had recently visited the hospital had been feeling pain in her left knee for several days and had developed a fever.

Zahir Kanjee, a hospitalist at Beth Israel, flashed the results of the patient’s labs on the screen, followed by an X-ray of her knee, which had fluid buildup around the joint. Kanjee tasked the residents with presenting their top four possible diagnoses for the patient’s condition, along with questions about the patient’s medical history and other examinations or tests they might pursue.


But before they split into groups, he had one more announcement. “We’re going to give you this thing called GPT-4,” he said, “and you’re going to take a few minutes and you can use it however you want to see if it might help you.”

Officially, large language models like GPT-4 have barely entered the realm of clinical practice. Generative AI is being used to help streamline medical note-taking, and it is being explored as a way to respond to patient portal messages. But there's little doubt that such models will have a far bigger footprint in health care going forward.

“It’s going to be utterly, utterly transformative, and medical education is not ready,” said Adam Rodman, a clinical reasoning researcher who co-directs the iMED initiative at Beth Israel. “And the people who have realized what a big deal it is are all kind of freaking out.”


At BIDMC, educators like Rodman and Kanjee are doing their best not to panic, but to prepare. At the health system’s workshops for medical residents, they have started to ask trainees to test the limits and potential of artificial intelligence in their work.

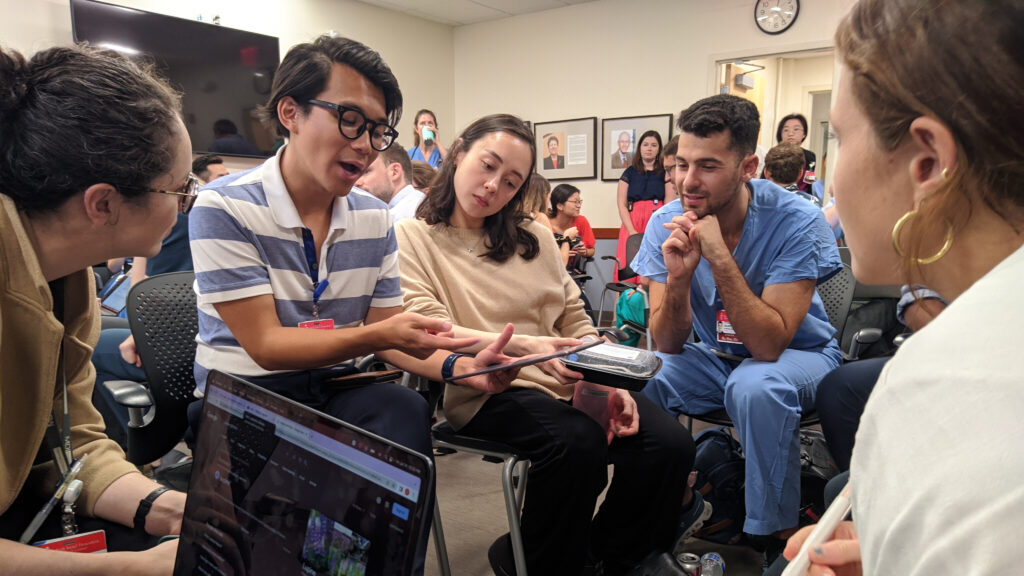

It was one of those workshops that brought internal medicine residents to a sterile, square room on the third floor of one of the oldest buildings at the Boston hospital. The residents scarfed down free lunch — pad thai and stir-fry — as they discussed potential diagnoses and how they’d approach the case.

“Septic joint, that’s my approach,” said one resident. “Septic until proven otherwise.” Others thought it might be gout, or gonorrhea, or Lyme disease.

After several minutes, Kanjee told them to open up GPT-4, and give it a shot.

It wasn’t the first time BIDMC had thrown GPT-4 into the arena. In April, Rodman helped design the first test of the AI model at Beth Israel’s biannual clinicopathological conference, or CPC. It’s a format that has been a mainstay of medical education for a century, allowing trainees to test their diagnostic reasoning against a case study.

“For this conference, we said, what if we ask Dr. GPT to fill in the place of one of the humans and see how it does, pitting it against the humans,” said Zach Schoepflin, a clinical fellow in hematology who ran the conference.

Schoepflin and Rodman fed GPT-4 six pages of details about the real, years-old clinical case, and then asked both the doctors and the model for their top three diagnoses, the reasoning behind their top guess, and the test that would lead to that diagnosis. GPT-4 had never seen the case before, and it started typing out its answer within seconds.

It wasn’t a slam dunk: GPT-4 misdiagnosed the patient with endocarditis, instead of the correct — and far rarer — diagnosis of bovine tuberculosis. But humans in the room had considered endocarditis too, as had the patient’s original care team. And most importantly, “it had really good logic and defended its answer,” said Rodman. Its performance mirrored his recent work — published with Kanjee and Solera Health CMO Byron Crowe — that found the chatbot got the right diagnosis in challenging cases 39% of the time, and had the right answer in its list of possible diagnoses 64% of the time.

“I think that’s one of the reasons that I scared my program leadership into taking this seriously, because they saw how well it performed,” said Rodman.

But that doesn’t mean GPT-4 is ready to serve as a diagnostic chatbot — far from it. “The takeaway is that GPT-4 is likely able to serve as a thought partner or an adjunct to an experienced physician who is stumped in a case,” said Rodman.

The question is how carefully doctors can learn to incorporate its outputs, because in general, “doctors tend to be very thoughtless when it comes to how we use machines,” said Rodman.

At the Friday workshop, third-year resident Son Quyen Dinh’s group used it like a “fancy Google search,” asking GPT-4 for a list of differential diagnoses. They also asked how it would respond to changes in the patient’s age or test results and what lab tests it thought the team should order next. Other groups asked the chatbot to explain its diagnoses, or to check their own impressions, like they would with a colleague.

Overall, the residents were impressed with GPT-4's performance, but they quickly noticed several shortcomings. Aaron Troy, a third-year resident, noted that knowing GPT-4's information could be unreliable actually reinforced his biases. Doctors are used to consulting reliable sources like the medical literature and trusting that they're right. But when faced with potentially unreliable outputs from generative AI, his gut reaction was to trust the AI most on the ideas that aligned with his own.

Another resident, Rachel Simon, asked Dinh’s group about GPT-4’s choice to rank gout as a likelier diagnosis when told the patient was a young woman. “Just a question,” she said, “because that feels weird.”

“That’s bizarre,” said Simon.

“That is bizarre…” said Dinh.

“And wrong,” replied Simon, and the room erupted into laughter. The right diagnosis? Lyme disease, which was on several of the residents’ minds before they consulted the AI.

Residents in the room realized that they still needed their medical knowledge to operate GPT-4 — whether it was knowing what information to prioritize so they could ask the right questions, critically parsing its answers to be able to say it was wrong, or knowing that its answers were too broad to actually be helpful.

And the workshop provided an opportunity to talk about other concerns with LLMs — like the tendency to make facts up, the limitations of the data the models are trained on, or the open questions about how they are making decisions.

Kanjee also warned residents that these AI models aren’t HIPAA compliant, meaning physicians should never put personal health information into them. While the patient used in the workshop was real, the workshoppers changed several of the case’s details to protect her privacy.

To the educators, perhaps the most important issue in bringing LLMs into medical education is making sure that the technology doesn’t come at the cost of physician understanding. Part of learning, after all, is grappling with the material to make it stick. “When you outsource your thinking to a machine, in some ways you are giving up the opportunity to learn and retain things,” said Kanjee.

Rodman shares those concerns, but said that engagement with the technology is the only way forward.

“It may be that it’s a disaster,” said Rodman. “But the point is that we’re trying to integrate these tools now and have these open discussions so the residents, at least they have the experience of asking, ‘How much can I trust these things? Are they useful? What are the best practices?’ Understanding that there’s no answers for those things right now. But there are things that we need to be talking about.”

The health system's experiences so far suggest that the field of medicine will soon need guidelines for the use of large language models. Rodman doesn't think it will work to build them from scratch, institution by institution. Instead, he hopes that national organizations like the Accreditation Council for Graduate Medical Education, the American College of Physicians, or the American Medical Association will take the lead on comprehensive best practices for both medical education and practice.

“I hope that this gets medical educators to take this technology seriously, and start thinking about what we all need to do in order to understand how to train doctors with this technology,” said Rodman. “Because you can’t turn back the clock.”

For now, at least BIDMC’s residents are seriously contemplating the future of AI-enabled medical practice — with all its risks and advantages. After seeing the diagnoses that GPT-4 produced, first-year resident Isla Hutchinson Maddox begrudgingly acknowledged the tool’s skills.

“This is kind of impressive,” she said, surprised. “My job is gone!”

In all seriousness, she said, she isn't convinced AI could replace a doctor. "So often people just want to be heard," she said. "I talked to a 75-year-old guy yesterday for 30 minutes just because he needed to be heard about his arthritis. And that wouldn't happen if I used this."

Then she had another thought: “But maybe if I use this, I can free up more time for him.”

This story is part of a series examining the use of artificial intelligence in health care and practices for exchanging and analyzing patient data. It is supported with funding from the Gordon and Betty Moore Foundation.